Table of Contents

Open Table of Contents

Midnight Code Cup

After the 2025 ICPC APAC Championship(which I will write a review of sooner or later), in search for another competitive programming event to quench my thirst for competition, I’ve recently participated in the Midnight Code Cup event last saturday.

Midnight Code Cup is a new event consisting of ‘heuristic’-styled problems, that are quite different from the traditional competitive programming problems you may expect from competitions like ICPC, GCJ, Hackercup and the bunch. It is more similar to Google Hash Code, if you have any experience with that competition which is now sadly left behind history. I’ve managed to find two teammates that I’ve met during university, and we spent 4 hours starting from 10 PM to 2 AM solving the problems during the qualification round. Although I had the most experience with competitive programming, the other two teammates were quite good with mathematics in general, and one of them is also currently a graduate student studying database systems, which could help us greatly in case we encounter any systems programming-related problems. Since all of us didn’t have much experience with similar heuristic competitions, we decided to just join in for the fun of it.

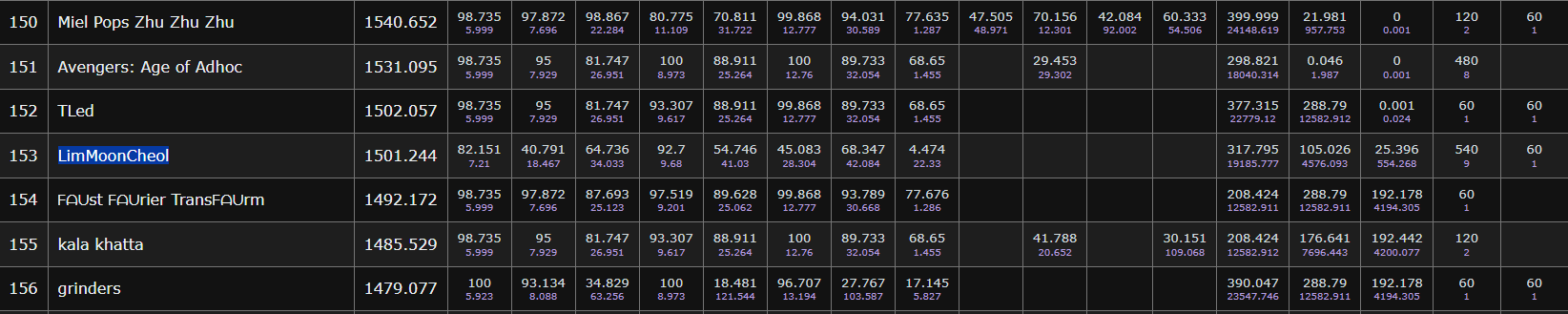

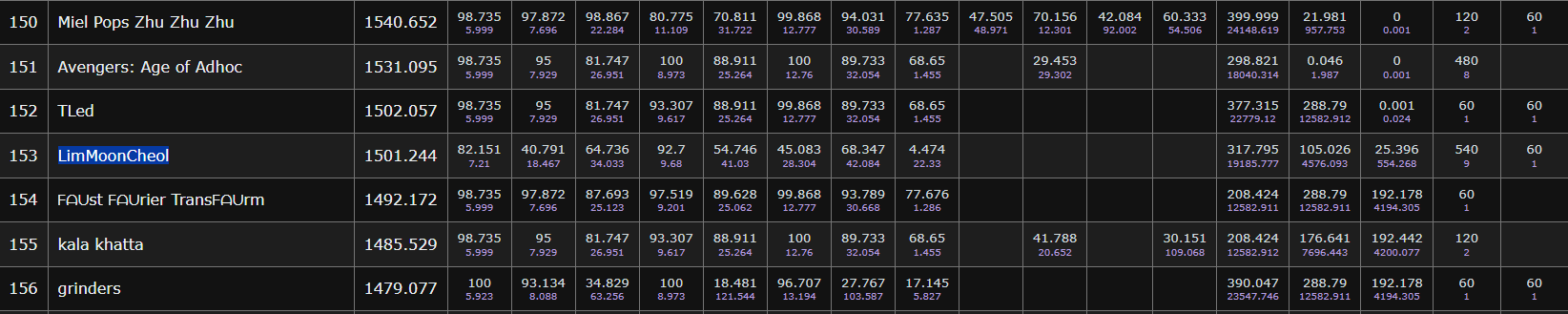

We ended up with a pretty low placement of being right in the middle of the scoreboard, but I think we did a decent job as we were able to at least figure out like 60~70% of what the close-to-optimal approach would be for each of the given problems.

The Strategy

Was that we didn’t have any specific strategy. I had my strengths in implementation and competitive programming-style problem solving, mnxcv had his strengths in mathematics and machine learning, and eff3ct_ was mostly an all-rounder with better experience with systems programming. Since these types of events usually come with a low number of problems and rather require you to squeeze out every tiny bit of optimization for each test case, we decided to take our time and read through all the problems first together and then decide whether to split the problems between each other or to solve them together. We took a look at past problems from events like Code Weekend, and expected similar problems to appear during the competition.

Qualification Round

Before the round started, we were able to take a look at the registered participants, and I was surprised to see some of the teams that I had met during the 2025 ICPC APAC Championship, whom I expected to be one of the strongest participants that I could spot from the list of team names. We figured that the competition might be tougher than expected, but we weren’t too worried about it as we didn’t expect ourselves to make it to the finals. After a bit of meaningless chit-chat, we received the link to the live scoreboard, and the round started.

The round consisted of 3 problems, and we were given 4 hours to solve them. Details for our approach on each of the problems will be described below, but for the most part, we basically split the problems one by one for each of us to handle during the entire round. The problems can be viewed from this google drive link which I think might be gone sooner or later. Oh well.

Problem A - Artistic Choice

I was the one handling this problem, and had spent the entirety of 4 hours implementing and optimizing this problem.

You are given a set of points on a 2D plane, and a 2D robot arm that is rooted at

(0,0). It is guaranteed that for all of the given points, the robot arm can reach them. You are required to find the angle configuration for each joint of the robot arm to reach each point, and the minimum total cost for a hamiltonian path that visits all of the points at least once. The cost between angle configuration A and B is calculated as the maximum angular difference between each of the joints in configuration A and B. The robot arm only consists of two or three joints.

In short, I had to do two things:

- Implement an inverse kinematics solver for 2R/3R robot arms.

- Optimize the hamiltonian path cost with the number of points going up to 2000. Note that there can be multiple possible angle configurations for the joints to reach the same point.

The Inverse Kinematics Solver

The first hurdle seemed like a daunting task to me at first, especially since I had been recently working with robotics simulations for my graduation project. Most IK libraries are built based on the assumption that you provide them with a proper robot configuration file(such as a URDF file), and the generic IK solvers themselves are fairly complicated to implement from scratch. I considered building a URDF file for each of the test cases(12 of them), but it seemed like too much work to get them working with this exact problem scenario.

I ended up trying the k kinematics crate which seemed to allow me to build an IK solver in a rather simple manner with the input data, and spent about an hour or two to get it working. Sadly it didn’t work, and due to the lack of documentation/experience I slowly realized that I may be unable to debug it in time after about 1.5 hours. At this point, I frantically scanned the problem description again in search for a hint that may help trivialize the problem, and then I realized that the number of joints were suspiciously low. Then it hit me that for 2R/3R(the R stands for revolute joints) arms, the inverse kinematics can be rather trivially solved compared to the generic case, making me regret that I should have googled it earlier.

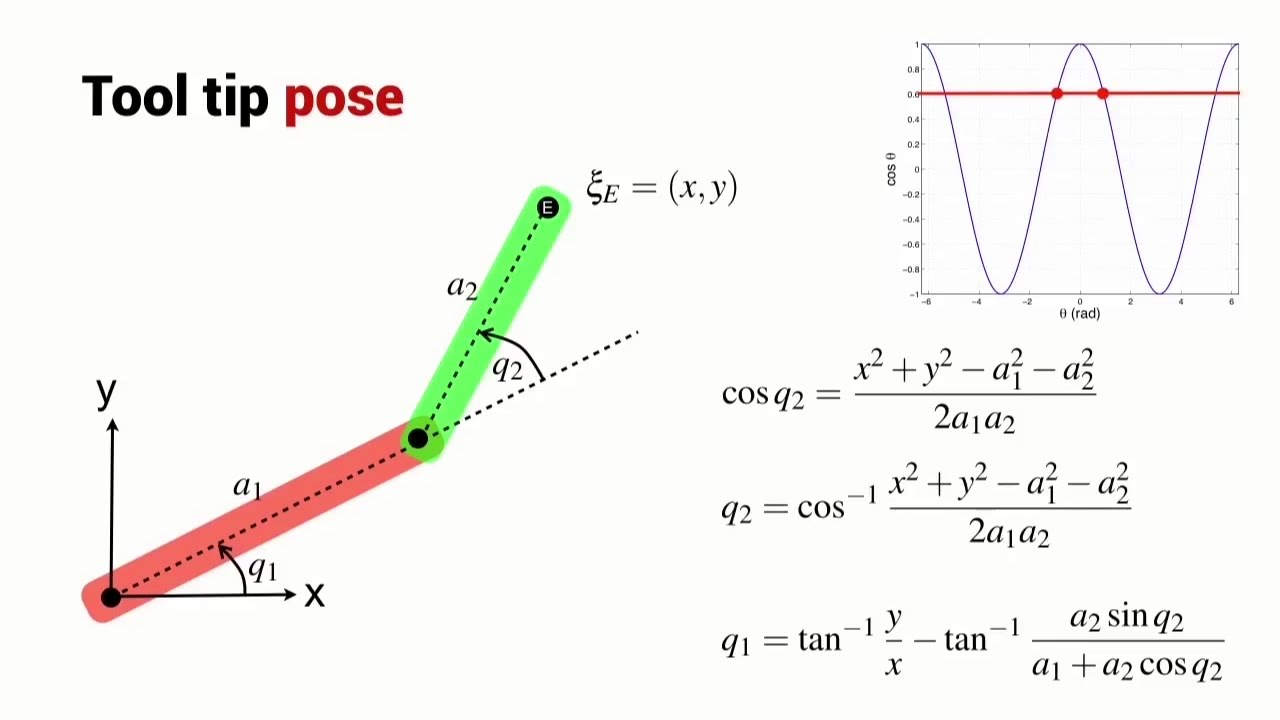

I was also able to quickly learn that the 3R case is much more complicated than the easily solvable 2R case, and decided to start from scratch to just work on the 8 test cases with a 2R robot arm. Thanks to the equation shown above, I was able to retrieve 2 sets of angle configurations for each of the given input points, and now had to solve the next problem of optimizing the hamiltonian path cost.

The Hamiltonian Path Problem

The problem setup is obviously quite different in the fact that the distance between each point isn’t necessarily tied to their euclidean distance, but rather the maximum angular difference between the two joint configurations used to reach each point. In this specific 2R case, since each point has exactly two possible joint angle configurations, it can be transformed into a problem of finding the minimum cost hamiltonian path where each point has a parity value of 0 or 1, and the cost between two points may vary depending on their parity.

It is also well known that the hamiltonian path problem is NP-hard, and with inputs as large as N = 2000, I had to implement some sort of a heuristic algorithm to solve the problem. Although a simple greedy implementation for finding the next closest point may suffice for generic inputs, I imagined that they could be easily countered by carefully crafted test cases, and thus wanted to skip the basic implementation to save time.

I was mostly unfamiliar with many of the popular heuristic algorithms that may be easily applicable for this sort of problem, and had considered the following options:

- Genetic Algorithm

- I did follow a tutorial on implementing this from scratch before, but I wasn’t confident enough on how to implement the crossover and mutation functions for this specific problem.

- Simulated Annealing

- I had implemented this before to solve a few problems on BOJ, and was more confident about it than the genetic algorithm although I was also unsure about the exploration strategy to use in the algorithm.

- Ant Colony Optimization

- I had never implemented this before, but I had heard of it for being a popular approach to solve TSP-like problems.

- And some other algorithms that I was hesitant on implementing for the first time during the remaining 2 hours:

- Beam Search

- Diversified Late Acceptance Search

- Autoregressive Reinforcement Learning with Proximal Policy Optimization

- Ok this is probably way overkill to implement, but I instinctively thought of it when I read the problem due to my graduation project shenanigans.

Since I had already wasted basically half the competition time on the IK part, I decided to go with the simulated annealing approach which I was most familiar with, and was able to quickly implement the baseline template of the algorithm with now having to choose an exploration strategy to use. I had heard of the 2-opt swapping search algorithm to solve TSP problems and decided to take it for a spin, but that didn’t help with the parity issue that appears with the multiple solutions of the IK solver. My final strategy eventually ended up being a combination of 2-opt swapping and random parity flipping being mixed together to be executed with different weights randomly chosen for each iteration. Unfortunately I was unable to implement the IK solver for the 3R case, so this was our final setup while I was tuning the hyperparameters for the remaining 30~40 minutes of the competition. You can take a look at the stripped-out code in rust from this gist.

Problem B - Problemsetter’s Nightmare

mnxcv spent most of this time working on this problem for our team, and I had only briefly read the description.

You are given a classic knapsack DP problem, but with a twist: You are also provided with 3 solution code files written in Python. Your task is to create input data with less than 100 bytes that can turn these solutions ineffective under the given time constraint. The scoring is measured based on how long the execution would have taken for each of these solution files.

It was a problem that many of us competitive programmers would have encountered if you have any experience with hosting a contest/round, and to be honest, I was never really good at coming up with such edge cases to prevent certain unoptimal greedy/flow/backtracking-based solutions.

The solving process was mostly based on observations of the given code files rather than implementing certain algorithms, and I think mnxcv was able to come up with a couple decent test cases that were able to give us a nice score on solutions B1 and B2, with an attempt at B3 as well.

Problem C1 / C2 - Junction Board Assembly

eff3ct_ was the one handling the burden of this problem, and I was mostly unable to help with it as you will discover in the problem description below.

You are given a simple input of numbers(3 numbers for C1, 1 number for C2) in each test case. You are provided with solution files for C1 and C2 written in assembly, and your task is to provide the answers to each test case that would’ve been produced by the solution assembly code. There are 10 test cases each for C1 and C2.

To make matters worse, the assembly code was actually written in/wrapped in a kotlin-based VM, and we were given the implementation of the pseudo-assembly code written in Kotlin. We received no prior notice about having to run Kotlin code for the contest, so naturally we had to spend some time either trying to set up a Kotlin development environment or to find an online IDE.

Obviously, if we could just execute the given code and feed in the input data, we would literally have the required output files, but the problem was that the assembly code seemed to be extremely hard to properly understand what it was trying to compute, and the execution time was abysmal to run anything past the first test case.

We spent a huge amount of time trying to understand the assembly code as we couldn’t really figure out what else to do other than testing random input data and praying that it wouldn’t take too long to run. Eventually after about 3.5 hours, eff3ct_ was able to figure out what the assembly code of C1 was trying to compute:

Given A red balls, B blue balls, and C green balls, compute the number of unique sequences of these balls where no two adjacent balls are of the same color.

I have absolutely no idea how we were supposed to figure this out and how eff3ct_ managed to do so at all, but testing a few small inputs seemed to get the answer right. We were able to code up a solution of time complexity O(N^3), and the maximum input size for N seemed to be about 2000. A funny downside was that the memory requirement reached about 180 GB for our naive solution, but luckily(?) eff3ct_ had access to a server with terabytes of RAM for his research being a graduate student at a database systems lab. With only a small amount of time left, we ran the solution on his server and were able to answer up to 9 out of 10 test cases, with 1 of them being too large to run in time. For C2 we imagined it would require the same process but with a harder hidden problem, and we were unable to figure it out in time.

Post Contest

In the end, I think we had some good fun tackling these problems as they weren’t something you would commonly encounter anywhere else. Aside from mnxcv tackling B, we also somehow found our own adequate matches as I was doing inverse kinematics + policy optimization on A which was somewhat related to my graduation project, and eff3ct_ was doing assembly + combinatorial optimization on C1 which was somewhat related to his research(although maybe a bit far fetched).

One of the things I slightly regret was maybe the implementation language choice of Rust for problem A, as none of the others were able to help with the problem due to inexperience with the language, although we simply didn’t really think of that option at all as we were busy with our own problems too. Ideally, I guess I should’ve separated out the optimization problem to be handled by mnxcv to better suit his strengths and move onto B after finishing the IK implementation on A, but I don’t feel too regretful about it as I doubt the final score would have changed too drastically.

A somewhat pleasant surprise was that I didn’t know that it would be this fun in comparison to other traditional team-of-3 competitive programming events. If only I knew about these types of competitions earlier, I may have participated in other ones like Google Hashcode when it was still around. One could argue that Kaggle provides similar contests regularly, but I feel they are a lot different in the sense that the choice for solutions being mostly reduced to ML and hyperparameter optimization in the end, reducing a lot of the fun found in these heuristic competitions. Hopefully more contests of this type/format will be held in the future, and I will be able to participate in them again.