SIGGRAPH Asia 2024

So this is a very late review after attending SIGGRAPH Asia 2024 in Tokyo back in December. All of my prior conference experiences were basically in South Korea, and I’ve always wanted to visit events held elsewhere that target a more global audience.

SIGGRAPH is one of the most well-known conferences in the graphics programming community, and it is held in various locations around the world. It is probably the largest academic computer graphics conference in the world, and thanks to the uniqueness of computer graphics and its relation to the entertainment industry, it also offers a lot of visually stunning showcases and presentations that non-graphics people can enjoy as well.

Although I haven’t really shown many of my projects online, I personally enjoy graphics programming projects, and SIGGRAPH was one of those events that I’ve always wanted to see for myself. Sadly the main SIGGRAPH event is usually held in the US, which was a bit out of reach for me at the moment (due to me being an undergraduate student), but having the Asia event in Tokyo was a great opportunity. So I gathered some friends interested in a trip to Tokyo and perhaps a bit of graphics programming, and we decided to go for it in the middle of the semester.

Much of my motivation for going to SIGGRAPH came from what I’d heard from other graphics programmers that I’ve seen or met online. Most notably, Acerola is a popular (probably the most popular) graphics YouTuber that I’ve been watching over the years, and seeing him share his overwhelmingly positive experience at SIGGRAPH kind of pulled the trigger on my decision to go abroad. Reading another blog post by Eric Haines, an author of RTR himself, approving of that review really made me feel like this could be the start of something new for me as well.

SIGGRAPH as an undergraduate student

One of the many things that undergrads worry about when someone urges them to visit such conferences is that the entire event would probably be too technically difficult for them to digest (aside from the cost 🙃). Beyond that, many students don’t feel the need to visit such conferences at all when they are already busy enough with their own studies. So what can one do as an undergraduate student at an academic conference like SIGGRAPH?

Well to be honest, it’s not that much different from what you would be doing as a full-time software engineer. Depending on your proficiency level, you can attend the technical sessions that suit your interests, and perhaps try networking with other attendees you run into. The only differences are that:

- You’re probably not very proficient at your subject of interest

- You probably don’t have a lot of experience in networking, and probably don’t have a reason for others to be interested in you in particular

- You’re probably not a researcher in the field, which means the academic opportunities are probably not relevant to you

And well, it is true that these probably-true statements greatly reduce the number of things you can enjoy at an academic conference. Indeed, many of the friends I brought along had a hard time digesting the technical sessions, and they couldn’t find much interest in the academic side either. I’m not sure how welcoming the main SIGGRAPH event is compared to SIGGRAPH Asia, but the networking opportunities also felt greatly limited for an undergraduate student with no connections coming from abroad. I tried reaching out to several graphics engineers who were registered for the event, but ultimately couldn’t find any that I could meet up with.

However, that doesn’t mean that I had nothing to enjoy at the conference. This being my first time attending such a big academic event, I was interested in pretty much any subject that even remotely resembled graphics programming (aside from AI). My main interests were somewhere around real-time rendering, physically based rendering, CGI, geometry optimization and the like: mostly classical subjects that any graphics programmer may be interested in.

For almost all of these interests, there was a dedicated technical session that I could attend to take a look at the latest research and development in the field. These sessions vary in format and depth: some are very technical and academic (paper-based), while others have a more casual format focused on visuals and demos.

Technical Sessions

The technical sessions I had attended could be grouped into the following categories:

-

Birds Of A Feather: An introductory showcase of various technical topics and how they are being used in the industry. Relatively lightweight in terms of technical depth, but it was a good way to see how the graphics industry is evolving with new cutting-edge technologies being introduced in the conference.

-

Featured Sessions: A large-scale featured session focused on a popular showcase of graphics technology applied in the industry. All of these sessions were very high quality, and I would presume that they would’ve all been interesting for any person that has an interest in computer graphics, whether in a technical sense or an artistic sense. My favorite was ‘The (Hex)Tech of Arcane Season 02’, which explored the technical challenges and solutions used in the animated production of the second season of Arcane on Netflix.

-

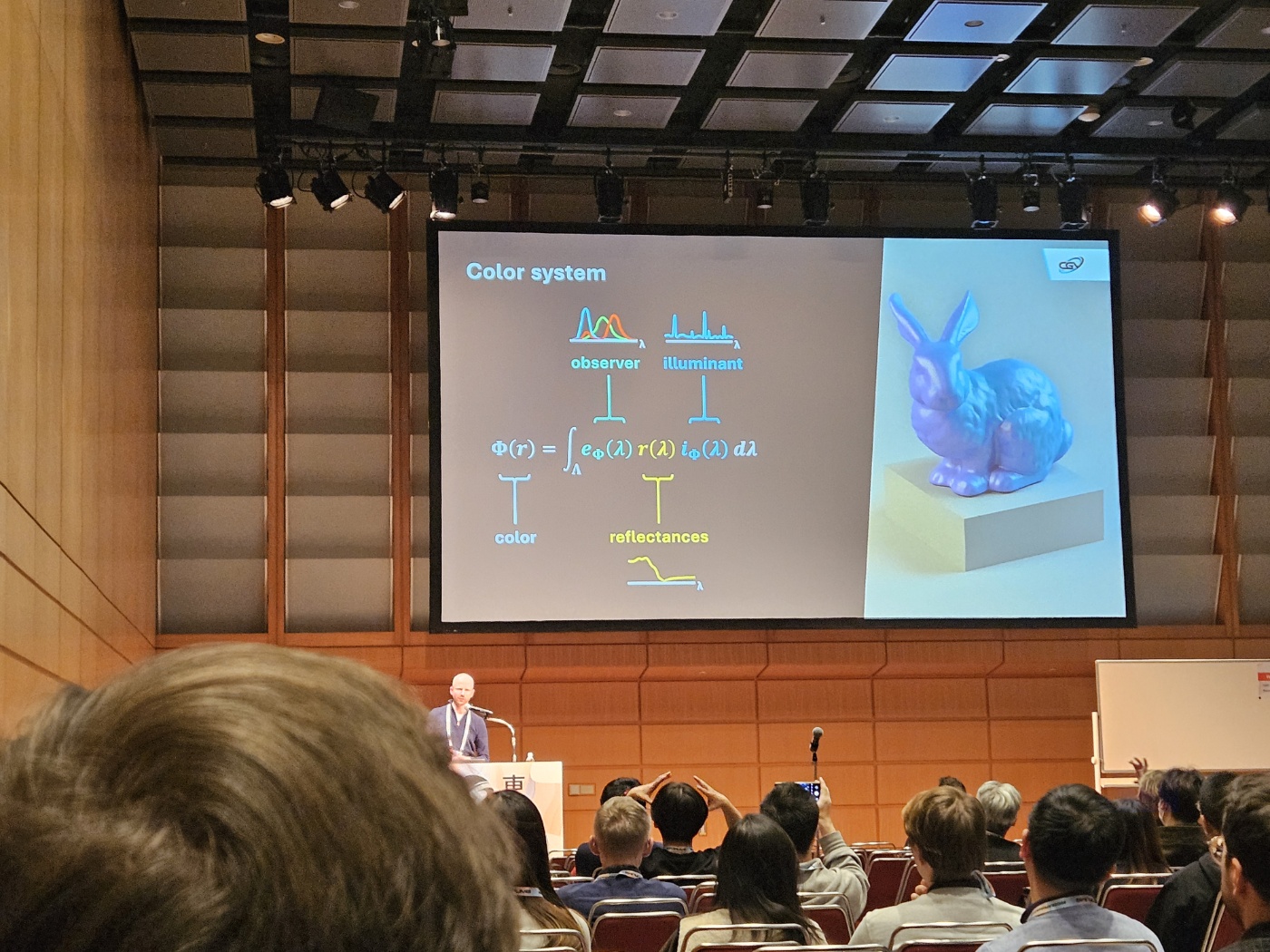

Technical Papers: The most technically detailed type, with researchers explaining their own papers all grouped by a larger category. Due to my late arrival, I couldn’t attend the ones that I had most anticipated(focused on geometry and lighting), but I do recall thoroughly enjoying the ‘Enhancing, Saliency` session. Among many papers, I was quite intrigued by Controlled Spectral Uplifting for Indirect-Light Metamerism, which thankfully the author was kind enough to explain to me in person about his development process after the session. I’ve been wanting to build my own spectral renderer ever since(preferrably with glium + egui or raylib), which hopefully might see the light of day within this year.

Although certain sessions including presentations are unavailable online for public viewing at the moment, you can still find the accepted papers that were showcased in these sessions.

Since there were so many topics being discussed everywhere, it would be an injustice to summarize everything into a single thematic focus, but speaking strictly from my personal experience of the sessions I attended, here are some takeaways that I found interesting:

OpenUSD

OpenUSD, a file format that enables interchange of scene information between various 3D graphics software, is being pushed quite aggressively in the industry (by NVIDIA and others).

If you have any sort of experience with 3D graphics, you may have experienced the pain of transferring your scene from one piece of software to another. A common pain point is transferring 3D ‘artwork’ made in software like Maya/Blender over to game/simulation engines like Unreal/Unity. Many tools have claimed to support seamless transfer in the past, and many people have also tried packing their entire scenes into commonly used 3D file formats such as fbx, obj or gltf, but neither has really worked out: each piece of software maintains and changes its own format over time, and the latter approach turns out to be inconvenient in practice. OpenUSD is an attempt to standardize the interchangeable scene format, and the consensus so far seems to be that it is the most promising solution.
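To give a rough feel for what such a scene description looks like, here is a minimal sketch that produces the text of a tiny `.usda` layer (the human-readable form of USD): a hierarchy of "prims" with typed attributes. The prim names `World` and `Ball` and the helper `minimal_usda` are made up for illustration, and real pipelines would normally author stages through the official `pxr` Python bindings or a DCC exporter rather than a string template.

```python
def minimal_usda(prim_name="Ball", radius=0.5):
    """Return the text of a tiny .usda layer: one sphere under a root transform.

    The prim names and values are hypothetical; only the overall layout
    (header, layer metadata, nested `def` prims) follows the usda text format.
    """
    return f'''#usda 1.0
(
    defaultPrim = "World"
    upAxis = "Y"
)

def Xform "World"
{{
    def Sphere "{prim_name}"
    {{
        double radius = {radius}
    }}
}}
'''


usda_text = minimal_usda()
print(usda_text)
```

The point is less the syntax than the idea: the scene graph itself (hierarchy, transforms, attributes) is the unit of interchange, instead of a single baked mesh.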

‘Mathematical’ Rendering

Of course rendering is already mathematical in nature, but to put more emphasis on the mathematical property itself: more ‘mathematical’ approaches to rendering that aren’t used as much in production yet are being actively explored, such as differentiable rendering, Gaussian splatting and radiance fields. Most of what you commonly see in 3D graphics is based on more traditional approaches to rendering, using mesh-based data to perform rasterization, ray tracing, and the like.

Differentiable rendering aims to make the rendering process itself differentiable (with similar intentions to machine learning), so that you can compute how the output image changes with respect to the scene parameters. This enables more advanced applications such as inverse rendering, where you recover the 3D geometry and material properties from 2D images. Differentiable rendering naturally has a lot of ties with machine learning, which could have some interesting implications when combined with reinforcement learning techniques. A bit of a side note, but differentiable physics simulation is another hot topic being actively explored in robotics, where it seems to quite significantly improve the performance of RL agents in physics-based environments and in real life. I would assume the end goal for differentiable rendering to be somewhat similar but backwards: to more accurately reproduce the observed real-life scene in a 3D environment.
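As a toy illustration of inverse rendering, here is a minimal sketch under heavy assumptions: a 2D "scene" where each pixel sees one surface normal, shaded with clamped Lambertian lighting, and the unknowns are a single albedo and the light angle. The function names (`render`, `loss_and_grads`, `fit`) and all constants are hypothetical, and the gradients are derived by hand here, whereas real differentiable renderers rely on automatic differentiation and have to deal with visibility discontinuities.

```python
import math


def render(albedo, light_angle, normal_angles):
    """Forward pass: clamped Lambertian shading, one pixel per surface normal."""
    return [albedo * max(0.0, math.cos(phi - light_angle)) for phi in normal_angles]


def loss_and_grads(albedo, light_angle, normal_angles, target):
    """Squared image error and its gradients w.r.t. albedo and light angle."""
    loss = d_albedo = d_angle = 0.0
    for phi, t in zip(normal_angles, target):
        cos_term = math.cos(phi - light_angle)
        lit = cos_term > 0.0
        shade = cos_term if lit else 0.0
        residual = albedo * shade - t
        loss += residual ** 2
        # d(pixel)/d(albedo) = shade
        d_albedo += 2.0 * residual * shade
        if lit:
            # d(pixel)/d(light_angle) = albedo * sin(phi - light_angle)
            d_angle += 2.0 * residual * albedo * math.sin(phi - light_angle)
    return loss, d_albedo, d_angle


def fit(target, normal_angles, albedo=0.3, light_angle=0.4, lr=0.02, steps=2000):
    """Plain gradient descent from a deliberately wrong initial guess."""
    for _ in range(steps):
        _, g_albedo, g_angle = loss_and_grads(albedo, light_angle, normal_angles, target)
        albedo -= lr * g_albedo
        light_angle -= lr * g_angle
    return albedo, light_angle


# "Observed" image, rendered with the parameters we pretend not to know.
normal_angles = [math.pi * i / 15 for i in range(16)]
target = render(0.8, 1.0, normal_angles)

albedo, light_angle = fit(target, normal_angles)
print(f"recovered albedo={albedo:.4f}, light angle={light_angle:.4f}")
```

The recovered values should end up close to the ones used to render the target. The same loop, scaled up to meshes, materials and cameras, is roughly what "extracting the scene from an image" means in practice.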

Gaussian splatting and radiance fields are more recent approaches to rendering that aim to represent 3D scenes using continuous functions rather than discrete meshes, which can lead to more efficient rendering and an easier transition from machine learning techniques to graphics. Although they are quite far from being used in a standalone manner for actual production results due to their performance and quality issues, there has been a lot of ongoing research in this area which you can check out here. The appeal of being able to transfer your real-world data into a 3D scene so easily is quite significant to 3D artists. And unlike other generative AI techniques that produce images or videos, Gaussian splatting can (optimistically speaking) act as a replacement for traditional 3D mesh data, which means it has the potential to become a drop-in replacement in existing 3D graphics pipelines. The reality of this happening is still probably very far away given how much mesh-based hardware optimization on the GPU we rely on for real-time rendering, but it opens a new angle on how we should approach graphics pipeline optimization in the future.
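To sketch how a pixel is actually shaded from splats instead of triangles, here is a simplified version of the compositing step: Gaussians that are assumed to be already projected to the screen are sorted by depth and alpha-blended front to back. The `Splat` class and `shade_pixel` function are hypothetical names, and the isotropic 2D footprint is a simplification (real implementations project anisotropic 3D covariances and use view-dependent color).

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    x: float          # screen-space center
    y: float
    sigma: float      # isotropic footprint (simplifying assumption)
    opacity: float    # peak opacity in [0, 1]
    color: tuple      # (r, g, b)
    depth: float      # distance from the camera


def shade_pixel(px, py, splats, background=(0.0, 0.0, 0.0)):
    """Front-to-back alpha compositing of Gaussian splats at one pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for s in sorted(splats, key=lambda s: s.depth):
        dist2 = (px - s.x) ** 2 + (py - s.y) ** 2
        alpha = s.opacity * math.exp(-0.5 * dist2 / s.sigma ** 2)
        for k in range(3):
            color[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # early exit once the pixel is effectively opaque
            break
    return tuple(c + transmittance * b for c, b in zip(color, background))


splats = [
    Splat(0.0, 0.0, 1.0, 1.0, (0.0, 0.0, 1.0), depth=2.0),  # opaque blue, farther
    Splat(0.0, 0.0, 1.0, 0.5, (1.0, 0.0, 0.0), depth=1.0),  # half-transparent red, nearer
]
pixel = shade_pixel(0.0, 0.0, splats)
print(pixel)
```

Because every step is a smooth function of the splat parameters, this kind of renderer can be optimized against photographs with gradient descent, which is where the tie to machine learning comes from.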

Generative AI in Graphics

Generative AI is a bit of a heated topic in the graphics community compared to other computer science fields, because the perception of generative AI in the graphics/art community is rather negative, for two reasons that I have observed so far:

- Copyright issues are still unsolved for outputs from diffusion models trained on who-knows-what kind of data, and a lot of corporations building such generative models have faced allegations of using copyrighted material for training without consent.

- Most AI-based methods applied in rendering that have sprung up since the huge boom of diffusion models have turned out to be quite spurious or simply ineffective when applied in the graphics industry. Most of them can’t perform well in real-time rendering, which takes up a significant portion of the industry, and even in offline rendering they often end up with noticeable artifacts that are simply not acceptable for production quality. Even in the rare cases when they do work, the AI-specific artifacts that show up in the output every now and then can significantly degrade its perceived quality, which can easily frustrate graphics programmers and artists trying to ensure consistent quality across their work. Not to mention that integrating such AI pipelines into existing rendering pipelines is often a very burdensome task with little reward, even in the rare cases when the paper actually describes how it can be implemented in practice instead of hiding it behind terse pseudocode.

- Of course there are still some effective techniques such as NVIDIA’s DLSS or denoisers, but most of them touch on the post-processing side of rendering rather than the actual rendering process itself.

Although people may not show this negative perception directly at offline events and conferences (many researchers are still interested in these AI subjects, as it is much easier to garner attention in academia with them), I could see it in many graphics programming communities online, even in ones that are far removed from artists and extremely focused on the technical side of graphics. Whenever a new technique was introduced in a paper and it included AI, many readers would simply pass it over, as it was almost certain that they would find no use in learning it. However, since this is still a technical conference at heart, the showcase of generative AI was inevitable, and I was somewhat skeptical about how much I would enjoy the AI-related sessions. Thankfully, I was relieved to find genuinely interesting applications of AI-based techniques for topics such as procedural material generation with RL, ‘boosting’ 3D object generation with procedural PBR materials, physics-based RL controllers with motion capture for general task instruction, and so on.

From what I could gather, it seemed like the community was indeed moving in a more practically applicable direction with generative AI in graphics, especially for tasks that are labor-intensive for humans such as physics-based motion control, animation, and PBR materials. I think far fewer people would argue that replacing these cumbersome tasks with AI would be a bad thing, and I could imagine this helping indie game developers and individual artists in particular, since they often cover such tasks by borrowing pre-built assets. I’m glad to see that we are moving towards a less conflicted future for generative AI in graphics (although image/video asset generation in general is still a heated topic).

My Review

I would’ve loved to see more of Gaussian splatting / path tracing / geometry optimization, which were my main interests, but I was still able to see a lot of interesting topics that I could learn from. The technical sessions were very well organized, which helped me understand a lot of new topics that I wasn’t quite familiar with before. I think the main SIGGRAPH event covers a lot more of my personal interests, and I hope to attend it in the future if I can.

Exhibitive Sessions

Of course, the technical sessions aren’t everything that SIGGRAPH Asia had to offer. A unique aspect of SIGGRAPH compared to other SIG conferences is that, due to the nature of computer graphics and its ties to the entertainment industry, there are a lot of visually stunning exhibitions and showcases that a non-expert viewer can simply enjoy. Specifically, there was a separate exhibition hall dedicated to showcasing various works of art, technology, and products from sponsoring companies, which was where most of my friends ended up spending their time after attending a few technical sessions.

Another interesting one was the Computer Animation Festival, which sadly I can’t show since the footage isn’t publicly available online at the moment.

Real-Time Live!

Thankfully the footage of the Real-Time Live! session is available online, since it was... well, live.

Real-Time Live! was the highlight of the exhibitive sessions, shown on the very last day of the conference. It is a combination of both the technical and exhibitive sessions, showing the most impressive technical applications and advances in real-time graphics.

My personal favorite was the RobotSketch research showcase, where the main author performed a live 3D sketch of a quadrupedal robot (which reminded me of the Dragoon from the game Starcraft) and then instantly animated it, showing it walking around in both a quadrupedal and bipedal manner.

You can view the live footage here.

Conclusion

Overall, I’d say it was absolutely worth missing a few days of classes to attend SIGGRAPH Asia 2024, even with the expensive costs for a student. It was eye-opening to attend a global conference like this, and to see so many people from all sorts of backgrounds gather for one common interest and share their knowledge and experience. Studying something by yourself is one thing, but meeting so many others with the same passion presenting their work really lit a flame inside me to continue my journey in graphics programming, whether in a professional or personal sense. Touring around Tokyo was another non-negligible plus, as the city was just absolutely pleasant to wander around, and I will definitely be visiting it again in the near future.

It also makes me wonder what the main SIGGRAPH event would be like, and what conferences on other subjects would be like. I personally am hoping to attend either SIGGRAPH/SIGGRAPH Asia or RustConf within this year or the next, although the exact plans will only materialize once I sort out my post-graduation schedule along with a new full-time position. I’d love to visit RustConf 2025 this year, but I’m not sure if I can manage my PTO days to attend it, depending on how my new job goes. In the long term, I would love to be a speaker at such conferences, and finally be able to experience the other side of the conference rather than just being an attendee. I think it would be a great way to share my own experience and knowledge with others, and hopefully inspire them to pursue their own interests in graphics programming. It’s a fun subject, I promise!

Learning graphics programming has also never been as useful as it is in the age of AI: being able to utilize GPU compute gives you a lot more power in terms of what you can do with your code, and running into all sorts of concurrency pitfalls and pipeline issues strengthens your programming skills in general. I’ve recently started to explore the world of Vulkan for both my current passion projects (graphics applications) and my potential future interest (ML backends). Thanks to the cross-platform nature of Vulkan (along with the fact that shaders are consumed as SPIR-V rather than in one specific high-level shading language), it is increasingly being used as a GPU compute backend for various applications, alongside CUDA/ROCm/OpenCL. Hopefully I can make something interesting enough with it to pivot my way into sharing my work around as a first step.

Anyway, if you’re by any means interested in computer graphics / graphics programming and have the opportunity to attend SIGGRAPH Asia or the main SIGGRAPH event, I would highly recommend that you go for it. If it is your first time attending such a conference, you are bound to have a great time as long as you have studied enough on your own to understand the latest technical jargon being thrown around. Try to bring some colleagues or friends along, and try to have some fun while you’re at it.